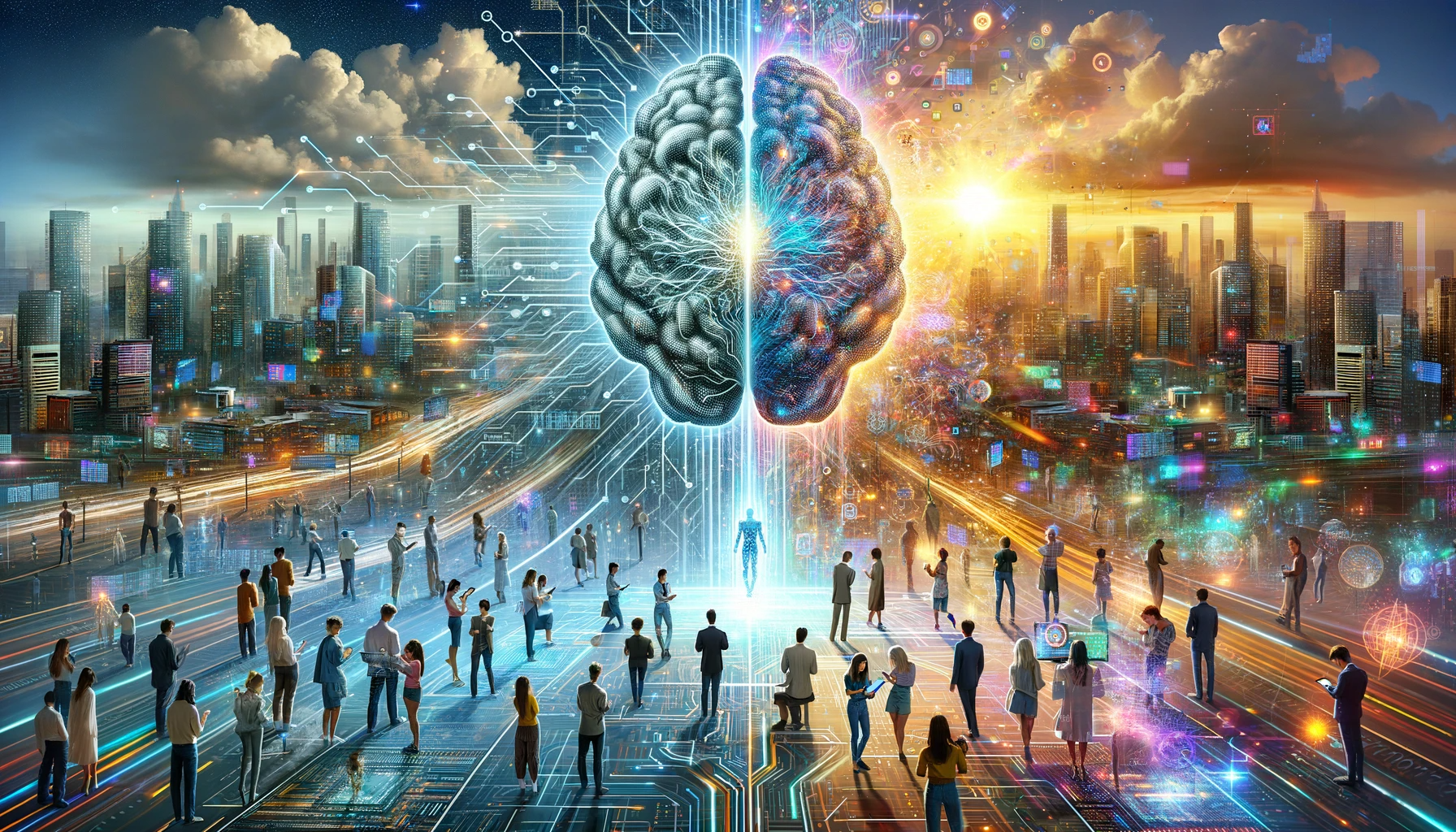

In the AI-driven post-truth era, distinguishing between fact and fiction becomes challenging. As AI blurs the lines of truth, it raises crucial questions about information trust and credibility, reshaping our approach to knowledge and its validation.

The thrilling rise of artificial intelligence has unleashed a whirlwind of possibilities, transforming our understanding of truth and knowledge in ways both awe-inspiring and concerning. Imagine AI language models like ChatGPT—an embodiment of conversational prowess, adept at conjuring text, answering queries, and chatting away with human-like fluency.

Yet, amid this technological marvel, there’s a twist of unease. The deluge of AI-generated content blurs the once-crisp lines between fact and fiction, creating what I term the “accumulation of ambiguities.” Suddenly, everything seems plausible, yet the bedrock of truth appears shrouded in uncertainty.

Here’s the kicker: our traditional Western definition of truth hinged on meticulous fact-checking and rigorous debate to validate information. Enter AI language models, which operate on absorbed patterns and data, ushering in an era where “likely sources” take precedence over verifiable, cited ones.

Drawing from colossal internet datasets, these AI models shine in generating contextually appropriate text. However, their Achilles’ heel lies in the inability to cite specific sources or provide concrete evidence, so their output may look correct on the surface yet lack the depth of factual grounding.

This quirk explains why AI models often conjure historically plausible yet factually incorrect responses—they lack that human spark of true understanding, stringing words together based on associations rather than genuine comprehension.

This transition towards probabilistic content challenges our classic pursuit of empirically validated truth. The repercussions ripple beyond academic debates; they seep into the very fabric of information credibility, gnaw at institutional trust, and shake the foundations of governance structures.

Picture a world where AI-generated content becomes the primary source of information. Its sheer accessibility might nudge users into accepting it without vetting its origins or accuracy, discarding the age-old tradition of verification.

The amalgamation of AI and human-authored content blurs the once-distinct boundaries between fact and fiction, significantly influencing society, communication, and knowledge sharing. Trust in established authorities dwindles in this “post-truth” era, fostering scepticism and potentially jolting democratic systems.

The subjective nature of truth in this arena stokes societal polarisation, throwing wrenches into constructive discourse and paving highways for misinformation campaigns.

An intriguing development in this realm is Meta’s recent decision to restrict certain advertisers from using AI in their campaigns, a stride towards responsible AI usage and safeguarding truth in the digital realm. It’s a potential game-changer in navigating this uncharted territory.

As we tread this evolving landscape, grasping the broader implications is paramount. AI-generated content poses a pragmatic conundrum—how do we gauge trust in information spawned from these models? Its ramifications echo through public discourse, policymaking, and societal frameworks.

The allure of AI-generated content’s ease might tempt users to embrace information at face value, sidelining the conventional practice of verifying accuracy. This fusion of AI and human-authored content challenges us to reassess the core tenets of truth and credibility.

This paradigm shift provokes profound queries: Can a society navigate a world where AI-generated content becomes a pivotal information tool? What happens when the lines blur between AI-generated and human-authored content, making it tricky to decipher fact from fiction?

In this realm, Meta’s decision to set limits for certain advertisers using AI in campaigns marks a crucial acknowledgement of these complexities. By delineating boundaries, it underscores the essence of responsible AI usage in preserving the integrity of information and truth.

Karine is the Managing Director of Midas PR and serves the company as its “Master Connector”.

She has over 20 years of experience driving PR strategy for leading organisations in various sectors, launching Midas PR in 2007 after gathering ideas, honing her skills in Luxembourg, and refining her PR approach by working in Thailand. Over the last 16 years, Midas PR has achieved strong and consistent growth and today is recognised as one of Thailand’s leading multi-award-winning PR and communications firms. Alongside heading up the Midas team, Karine is Chair of PRCA Thailand and a member of PROI Worldwide.

Karine is also a renowned thought leader and in-demand speaker on industry topics such as reputation management, corporate communications, and diversity and inclusion. She has shared her insights at several leadership, business and PR conferences, such as the Women in Business Series and the Thailand Startup Summit.

A passionate advocate for female leadership, Karine aims to serve as a role model for other women and inspire them to pursue their aspirations. She co-founded The Lionesses of Siam, a distinctive social and business networking group exclusively for women in Thailand. She has been honoured with several awards, including the Prime Award for International Business Woman Of The Year 2023.
